Reducing Bias to Increase Test Set Accuracy

Motivation

Visual insight is an important tool that allows engineers to understand what a deep neural network is doing. It satisfies curiosity, but it also provides information that can be used to optimize machine learning algorithms. This article gives a brief overview of a technique for identifying biases in how a model categorizes an object, so that the team can focus on reducing the bias and increasing test set accuracy.

At best, a biased model will not generalize well to a production environment. At worst, it may perpetuate stereotypes about race, gender, and age, which can lead to legal ramifications for the company and a poor experience for its users.

Bias Identification

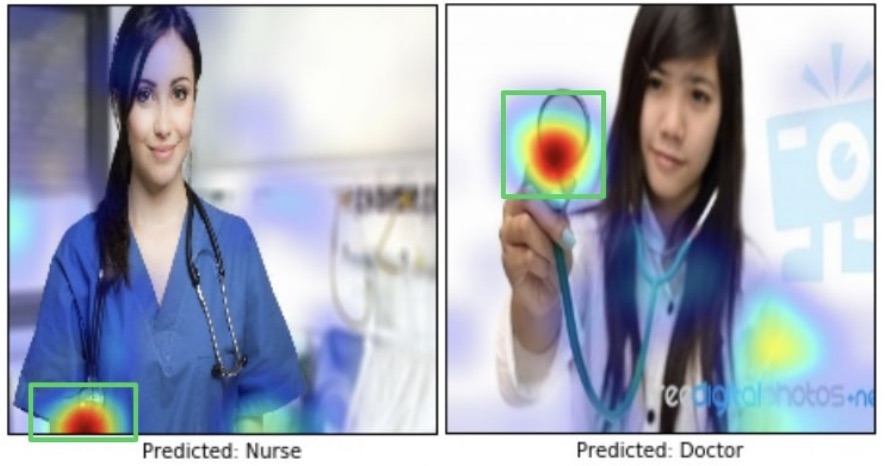

The example given in the Grad-CAM research uses an ImageNet-pretrained VGG-16 model fine-tuned for a binary classification task: doctor vs. nurse. In this case, the model achieved good validation accuracy but generalized poorly to a gender-balanced test set. The class activation maps for two detections offer some evidence as to why that may be the case.
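As a rough illustration of how such a class activation map is formed, the sketch below implements only the core Grad-CAM combination step in NumPy: average each channel's gradients over space to get a weight, take the weighted sum of the feature maps, and apply a ReLU. The function name `grad_cam` and the toy inputs are assumptions made for illustration. In a real pipeline the activations and gradients would be captured from a late convolutional layer of the trained network, and the resulting map would be upsampled to the input image size before being overlaid.

```python
import numpy as np


def grad_cam(activations, gradients):
    """Combine feature maps and gradients into a Grad-CAM heatmap.

    activations: array of shape (K, H, W), feature maps of the chosen layer.
    gradients:   array of shape (K, H, W), gradient of the class score
                 with respect to each feature map.
    Returns an (H, W) map scaled to the range [0, 1].
    """
    activations = np.asarray(activations, dtype=float)
    gradients = np.asarray(gradients, dtype=float)
    # Channel weights: global-average-pool the gradients over space.
    weights = gradients.mean(axis=(1, 2))  # shape (K,)
    # Weighted sum over channels, then ReLU to keep positive evidence only.
    cam = np.maximum(np.tensordot(weights, activations, axes=1), 0.0)
    peak = cam.max()
    return cam / peak if peak > 0 else cam


# Toy inputs standing in for real network activations and gradients.
rng = np.random.default_rng(0)
acts = rng.random((4, 7, 7))
grads = rng.standard_normal((4, 7, 7))
heatmap = grad_cam(acts, grads)
print(heatmap.shape)  # (7, 7)
```

Bright regions in the resulting map are the ones that pushed the class score up, which is what makes a focus on the face rather than the clothing visible at a glance.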

In the figure above, it seems the model focused on the face to decide whether the person was a nurse. Most people would immediately see this as an unreliable cue. With this visual insight, the team can reduce the bias, for example by rebalancing the training data, and retrain the model to achieve higher test set accuracy.

Ah, much better. It seems the model is now focused on the coat, sleeves, and stethoscope, which intuitively makes sense. Because it looks at the right regions, the retrained model should generalize better to the test set.
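A visual check like this can be paired with a numeric one. The sketch below is not from the Grad-CAM paper; it assumes each test example carries a hypothetical group annotation and simply breaks accuracy down by class and group. A large gap between groups within the same class would point to the kind of bias the activation maps revealed. The records are made up for illustration.

```python
from collections import defaultdict


def accuracy_by_subgroup(records):
    """Return accuracy for each (true_label, group) pair.

    records: iterable of (true_label, predicted_label, group) tuples.
    """
    correct = defaultdict(int)
    total = defaultdict(int)
    for true_label, predicted, group in records:
        key = (true_label, group)
        total[key] += 1
        correct[key] += int(predicted == true_label)
    return {key: correct[key] / total[key] for key in total}


# Made-up predictions, for illustration only.
records = [
    ("nurse", "nurse", "female"),
    ("nurse", "doctor", "male"),
    ("nurse", "nurse", "male"),
    ("doctor", "doctor", "male"),
    ("doctor", "nurse", "female"),
    ("doctor", "doctor", "female"),
]
results = accuracy_by_subgroup(records)
for key, acc in sorted(results.items()):
    print(key, f"{acc:.2f}")
```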

Outro

This article didn’t go over the math or the implementation, as both are available in the paper. The main takeaway is exposure to the tools that can be used while building a product to make sure it serves its users.